This page contains sample videos and illustrations from my work on various research projects. I’ll try to keep it updated with interesting findings as soon as they become available. I would be glad to hear any questions or suggestions, so feel free to contact me.

Smoke detection in endoscopic surgery videos

Event-based annotation of surgical operations has received little attention, mainly due to the diversity of the visual content. As a first approach to the retrieval of surgical events, we have addressed the problem of smoke detection in endoscopic surgery videos. The proposed algorithm extracts optical flow features from elementary video shots and classifies them with one-class support vector machines. The following video depicts the underlying concept: after video segmentation, each shot is played twice, first showing the spatial distribution of all particles and then the trajectories of the particles that are actively moving. Note how chaotic the trajectories become in the presence of smoke.

Related work: C. Loukas, et al., ‘Smoke detection in endoscopic surgery videos: a first step towards retrieval of semantic events’, Int J Med Robot Comput Assist Surg, 11(1):80-94, 2015.

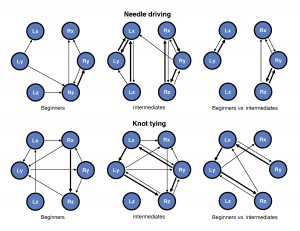

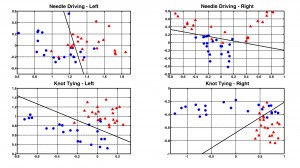
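To make the idea of “chaotic” trajectories concrete, the following Python sketch scores a shot by the mean absolute turning angle of its particle trajectories. This feature, the function names and the threshold are illustrative assumptions, not the features used in the paper. The final step is a simple z-score novelty detector standing in for the one-class SVM (it is not an SVM), and it assumes the one-class model is trained on smoke-free shots.

```python
import math
from statistics import mean, stdev

def turning_angles(track):
    """Absolute heading changes (radians) between consecutive steps of one trajectory.

    track: list of (x, y) particle positions in successive frames.
    """
    headings = [math.atan2(y1 - y0, x1 - x0)
                for (x0, y0), (x1, y1) in zip(track, track[1:])]
    angles = []
    for h0, h1 in zip(headings, headings[1:]):
        # Wrap the difference into [-pi, pi) so a turn from +179 to -179 degrees counts as 2 degrees.
        d = (h1 - h0 + math.pi) % (2 * math.pi) - math.pi
        angles.append(abs(d))
    return angles

def shot_chaos(tracks):
    """Mean absolute turning angle over all trajectories of a shot (higher = more chaotic)."""
    all_angles = [a for track in tracks for a in turning_angles(track)]
    return mean(all_angles) if all_angles else 0.0

class ZScoreNovelty:
    """Hypothetical one-class stand-in (NOT an SVM): learns mean/std of one class, flags outliers."""

    def __init__(self, k=3.0):
        self.k = k  # number of standard deviations that counts as "far" from the training class

    def fit(self, scores):
        self.mu, self.sigma = mean(scores), stdev(scores)
        return self

    def is_outlier(self, score):
        return abs(score - self.mu) > self.k * self.sigma
```

A straight trajectory scores 0, while a zigzag with right-angle turns scores about π/2. In practice `ZScoreNovelty` would be replaced by a proper one-class SVM (for example scikit-learn's `OneClassSVM`) operating on a richer feature vector.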

Analysis of hand kinematics: surgical skill assessment

We have developed techniques for analyzing the hand kinematics of surgical trainees based on multivariate autoregressive (MAR) models. In addition to recognizing surgical experience, a task commonly addressed with methods such as hidden Markov models (HMMs), MAR models can provide insight into how surgical gestures are performed during a task or even an entire operation. This gesture-performance information is based on the concept of the Hand Motion Network (HMN), which depicts the magnitude of connectivity/synchronization between kinematic signal sources. HMNs have been extracted for basic surgical tasks such as knot tying and needle driving, as well as for the key phases of laparoscopic cholecystectomy. Related works:

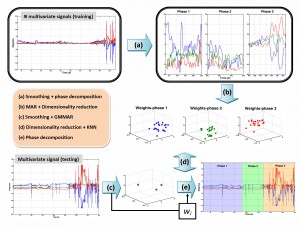
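A minimal sketch of the MAR idea, assuming a first-order model x_t ≈ A x_{t-1} fitted by least squares, with the magnitudes of the off-diagonal coefficients taken as directed edge weights of a toy hand motion network. The published HMN may use a different model order and a different connectivity measure; the function names and the synthetic signals below are hypothetical.

```python
import math

def solve(M, b):
    """Solve the small square system M x = b by Gaussian elimination with partial pivoting."""
    n = len(M)
    A = [list(M[i]) + [b[i]] for i in range(n)]  # augmented matrix
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(c + 1, n):
            f = A[r][c] / A[c][c]
            for k in range(c, n + 1):
                A[r][k] -= f * A[c][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (A[i][n] - sum(A[i][k] * x[k] for k in range(i + 1, n))) / A[i][i]
    return x

def fit_mar1(X):
    """Least-squares MAR(1) fit x_t ~ A x_{t-1}; X is a list of d-dimensional samples."""
    d = len(X[0])
    C0 = [[0.0] * d for _ in range(d)]  # sum of x_{t-1} x_{t-1}^T
    C1 = [[0.0] * d for _ in range(d)]  # sum of x_t x_{t-1}^T
    for prev, cur in zip(X, X[1:]):
        for i in range(d):
            for j in range(d):
                C0[i][j] += prev[i] * prev[j]
                C1[i][j] += cur[i] * prev[j]
    # A C0 = C1 and C0 is symmetric, so each row a_i of A solves C0 a_i = (row i of C1).
    return [solve(C0, row) for row in C1]

def motion_network(A, names):
    """Directed edges source -> target weighted by |A[target][source]| (off-diagonal terms)."""
    d = len(A)
    return {(names[j], names[i]): abs(A[i][j])
            for i in range(d) for j in range(d) if i != j}

# Example: x2 is a lagged, scaled copy of x1 (x2_t = 0.8 * x1_{t-1}).
x1 = [math.sin(1.3 * t) + 0.5 * math.sin(0.37 * t) for t in range(200)]
X = [(x1[t], 0.8 * x1[t - 1] if t > 0 else 0.0) for t in range(200)]
net = motion_network(fit_mar1(X), ["x1", "x2"])
```

Because the second signal is driven by the first with a one-step lag, the fitted network recovers an x1 → x2 edge with weight 0.8.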

Surgical Workflow Analysis (SWA)

The MAR model has also been combined with Gaussian mixtures, yielding the so-called GMMAR model, which has been employed to segment a surgical operation into its key phases for applications in surgical workflow analysis and surgical process modeling. Unlike previous works in this area, which require knowledge of the type of tools employed at each time step of the operation, our technique uses signals from orientation sensors attached to the endoscopic instruments. The basic idea is that each phase of the operation is characterized by a unique pattern of surgical gestures, which is captured by the GMMAR process. A graphical abstract of the method is provided below.

Related work: C. Loukas, et al., ‘Surgical workflow analysis with Gaussian mixture multivariate autoregressive (GMMAR) models: a simulation study’, Computer Aided Surgery, 18(3-4):47-62, 2013.
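The phase-segmentation idea behind a mixture of autoregressive models can be illustrated with its E-step: each component (one per surgical phase) predicts the next sample from the previous one, and the posterior weight of a component at time t says how well that phase explains the local motion. This is a simplified sketch assuming scalar AR(1) components with known parameters; the actual GMMAR model is multivariate, operates on orientation-sensor signals and has parameters that must be estimated from data, none of which is shown here. The function names are hypothetical.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of a 1-D normal distribution N(mean, var) at x."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)

def responsibilities(x, components):
    """Posterior probability of each component at every t >= 1.

    components: list of (weight, a, var); under component k, x_t ~ N(a * x_{t-1}, var).
    """
    out = []
    for prev, cur in zip(x, x[1:]):
        lik = [w * gaussian_pdf(cur, a * prev, var) for w, a, var in components]
        total = sum(lik)
        out.append([l / total for l in lik])
    return out

def segment(x, components):
    """Hard phase label per time step t >= 1: the component with the largest responsibility."""
    return [max(range(len(r)), key=r.__getitem__) for r in responsibilities(x, components)]
```

For example, with components `[(0.5, 0.9, 0.01), (0.5, -0.9, 0.01)]` and the sequence `[1.0, 0.9, 0.81, 0.729, -0.6561, 0.59049]`, the smoothly decaying part is assigned to component 0 and the sign-alternating part to component 1, giving `[0, 0, 0, 1, 1]`.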

Endoscopic Instrument Tracking

Knowing the position of the endoscopic instruments during a surgical task is important for several applications in minimally invasive surgery (e.g. evaluation of surgical skills). One way to achieve this is to use electromechanical position sensors. An alternative approach, more challenging but free of prerequisites such as attaching sensors to the tools, is to use purely visual information obtained from the endoscopic camera. To this end, an instrument tracking algorithm based on Kalman filtering has been developed. The algorithm exploits the linear shape of the endoscopic tool to track its edges in the Hough parameter space.
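The measurement step can be sketched with a plain Hough transform: every edge pixel votes for all lines ρ = x cos θ + y sin θ passing through it, and the accumulator peak gives the (ρ, θ) of the dominant straight edge, such as one side of the instrument shaft. The sketch assumes edge pixels have already been extracted (e.g. by an edge detector); the function name, bin sizes and example points are illustrative, and libraries such as OpenCV provide optimized equivalents (`HoughLines`).

```python
import math

def hough_peak(points, n_theta=180, rho_step=1.0):
    """Return (rho, theta) of the line supported by the most edge points.

    Uses the normal form rho = x*cos(theta) + y*sin(theta) with theta sampled in [0, pi).
    """
    votes = {}
    for x, y in points:
        for k in range(n_theta):
            theta = math.pi * k / n_theta
            rho = x * math.cos(theta) + y * math.sin(theta)
            key = (round(rho / rho_step), k)       # quantize rho into bins of size rho_step
            votes[key] = votes.get(key, 0) + 1
    (rho_bin, k), _ = max(votes.items(), key=lambda kv: kv[1])
    return rho_bin * rho_step, math.pi * k / n_theta
```

For a vertical edge made of the points (10, 0), (10, 1), …, (10, 99), the peak is at ρ = 10, θ = 0.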

The following video shows some preliminary results on instrument tracking with Kalman filtering. The training task requires the trainee to place a series of pegs on a pegboard (peg-transfer task). The green lines indicate the instrument edges tracked in the Hough parameter space (the measurements), whereas the red line is the prediction obtained by Kalman filtering of these measurements.
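The filtering step can be sketched as two independent constant-velocity Kalman filters, one for ρ and one for θ of a tracked edge: each filter predicts where the line will be in the next frame and then corrects that prediction with the new Hough measurement. This decoupled model, the noise values and the function names are assumptions made for illustration; a full tracker would more likely use a joint state for both edges and handle the wrap-around of θ.

```python
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter; state = [value, rate], measurement = value."""

    def __init__(self, x0, q=1e-3, r=1.0, p0=10.0):
        self.x = [x0, 0.0]                      # state estimate
        self.P = [[p0, 0.0], [0.0, p0]]         # state covariance
        self.q, self.r = q, r                   # process / measurement noise variances

    def predict(self, dt=1.0):
        v, rate = self.x
        self.x = [v + dt * rate, rate]
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and a simplified Q = q * I.
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.x[0]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                          # innovation variance (H = [1, 0])
        k0, k1 = a / s, c / s                   # Kalman gain
        y = z - self.x[0]                       # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]
        return self.x[0]

def track_line(measurements, rho_noise=4.0, theta_noise=1e-3):
    """Filter a sequence of (rho, theta) measurements; None marks a frame with no detection."""
    first = next(m for m in measurements if m is not None)
    kf_rho = ConstantVelocityKF(first[0], r=rho_noise)
    kf_theta = ConstantVelocityKF(first[1], q=1e-6, r=theta_noise)
    estimates = []
    for m in measurements:
        est = (kf_rho.predict(), kf_theta.predict())
        if m is not None:
            est = (kf_rho.update(m[0]), kf_theta.update(m[1]))
        estimates.append(est)
    return estimates
```

Passing `None` for a frame without a reliable measurement makes the tracker fall back on its prediction, which is one practical benefit of filtering over raw per-frame detection.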

Update: Check out our YouTube channel for several videos related to real-time visual tracking and pose estimation of the endoscopic instruments. The algorithm has been designed mainly for Augmented Reality applications in surgical simulation training.

Related work: C. Loukas, et al., ‘An integrated approach to endoscopic instrument tracking for augmented reality applications in surgical simulation training’, Int J Med Robot Comput Assist Surg, 9(4):e34-51, 2013.

Prior to this work I experimented with robotic instrument tracking without Kalman filtering (just tracking in color space). The following video is an interesting demonstration from a cholecystectomy (unpublished data). Below the video window, one can see how the trajectory of the arm unfolds. The arm is tracked with a green cross inside a rectangle.
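A color-space tracker of this kind can be sketched by converting each pixel to HSV, keeping the pixels inside a hue band, and reporting the centroid (the cross) and bounding box (the rectangle) of the selected pixels. The hue band and thresholds are hypothetical parameters that would have to be tuned to the instrument's actual color; the frame is a plain list of RGB tuples to keep the example dependency-free.

```python
import colorsys

def track_color(frame, hue_range, min_sat=0.4, min_val=0.3):
    """Centroid and bounding box of the pixels whose HSV color falls inside a hue band.

    frame: list of rows, each a list of (r, g, b) tuples with channels in 0..255.
    hue_range: (low, high) hue limits in [0, 1] (hypothetical, to be tuned per instrument).
    Returns ((cx, cy), (x_min, y_min, x_max, y_max)), or None if no pixel matches.
    """
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            # Low saturation or brightness means grayish/dark pixels whose hue is unreliable.
            if hue_range[0] <= h <= hue_range[1] and s >= min_sat and v >= min_val:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    centroid = (sum(xs) / len(xs), sum(ys) / len(ys))
    return centroid, (min(xs), min(ys), max(xs), max(ys))
```

Collecting the centroid over successive frames yields the arm trajectory.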

Brain Signal Analysis

N400 & Independent Component Analysis

EEG waves modulated by context are identified about 400 ms after the presentation of a new semantic stimulus, such as a word or a number, within a prior context. However, it is not known whether any component of these waves arises from a common brain system activated by different symbolic forms. Experiments on healthy subjects provided evidence that one element of the activity contributing to the N400 is common to different symbolic forms. The possibility that part of the scalp N400 potentials recorded with different symbolic forms represents activity in a common neural circuit was investigated by unmixing the EEG signals with independent component analysis (ICA, a mathematical technique that solves the ‘cocktail party problem’).
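For readers unfamiliar with ICA, the standard linear mixing model behind it is summarized below. Here x(t) collects the scalp EEG channels, s(t) the statistically independent sources, A the unknown mixing matrix and W the unmixing matrix estimated by ICA (up to the usual scaling and permutation ambiguity). The notation is ours, and the specific ICA algorithm used in the study is not described on this page. The second line shows how the scalp contribution of a single component k can be isolated by back-projecting it through the k-th column a_k of W⁻¹.

```latex
\begin{aligned}
\mathbf{x}(t) &= \mathbf{A}\,\mathbf{s}(t), \qquad
\hat{\mathbf{s}}(t) = \mathbf{W}\,\mathbf{x}(t), \qquad
\mathbf{W} \approx \mathbf{A}^{-1}, \\
\mathbf{x}(t) &= \mathbf{W}^{-1}\,\hat{\mathbf{s}}(t) = \sum_{k} \mathbf{a}_k\,\hat{s}_k(t), \qquad
\mathbf{x}^{(k)}(t) = \mathbf{a}_k\,\hat{s}_k(t).
\end{aligned}
```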

The following video shows the evolution of the N400 activity after the presentation of a semantic stimulus (e.g. 1, 2, 3, 4, …, 400). Time is shown in the bottom right corner (in seconds). Note the increase in brain activity after about 400 ms.
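ERP components such as the N400 are usually visualized by averaging many stimulus-locked EEG epochs and then looking for the negative deflection around 400 ms. The sketch below does this for a single channel; the 300–500 ms search window, the sampling rate used in the test example and the function names are assumptions for illustration.

```python
def erp(trials):
    """Sample-by-sample average of equally long, stimulus-locked single-trial epochs."""
    n = len(trials)
    return [sum(samples) / n for samples in zip(*trials)]

def n400_peak(avg, fs, t0=0.0, window=(0.3, 0.5)):
    """Latency (s) and amplitude of the most negative sample of avg inside window.

    avg[i] is the voltage at time t0 + i / fs seconds relative to stimulus onset.
    """
    idx = [i for i in range(len(avg)) if window[0] <= t0 + i / fs <= window[1]]
    i_min = min(idx, key=lambda i: avg[i])
    return t0 + i_min / fs, avg[i_min]
```

Restricting the search to a latency window keeps larger deflections elsewhere in the epoch (e.g. early sensory components) from being mistaken for the N400.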

Related work: N. Fogelson, C. Loukas, J. Brown, P. Brown, ‘A common N400 EEG component reflecting contextual integration irrespective of symbolic form’, Clin Neurophysiol, 115(6):1349-58, 2004.

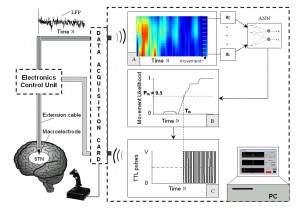

Movement prediction based on real-time processing of deep brain signals

There is much current interest in deep brain stimulation of the subthalamic nucleus (STN) for the treatment of Parkinson’s disease. Offline processing of the local field potentials (LFPs) recorded with implanted macroelectrodes has revealed a characteristic oscillation in the beta band (13–40 Hz). Based on this evidence, a software application was developed to predict the occurrence of self-paced hand movements. The LFP signals recorded in real time from the STN via the implanted macroelectrodes served as input to the system. The algorithm processes these signals with wavelets and artificial neural networks to generate a temporal probability that a hand movement will occur within the next 1–2 s. Note that the movements were self-paced (i.e. the patient received no warning signal to perform a movement). The following figure shows a schematic diagram of the overall methodology.

Next is a sample video of hand-movement prediction (in slow motion). The three traces plotted on the GUI of the developed system (ZEUS) show the LFP signal (top), the joystick movement (signified by a change in amplitude) and the movement prediction probability. An offset of 1 was added to the probability, so the plotted value ranges from 1 (lowest probability of a hand movement within the next 2 s) to 2 (highest probability). In this case the prediction is made about 0.5 s in advance (x-axis length = 256 scans = 500 ms). During prediction, the system also delivers a short 5 μV pulse to the STN via the same electrode used for acquisition; this pulse is shown in cyan on the LFP plot.
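The figures quoted in this paragraph imply two simple conversions, worked out below under the assumption that one “scan” is one equally spaced LFP sample and that p is a probability in [0, 1]. The symbols f_s, Δt and y_plot are introduced here for illustration.

```latex
\begin{aligned}
f_s &= \frac{256\ \text{scans}}{0.5\ \text{s}} = 512\ \text{Hz}, \qquad
\Delta t = \frac{1}{f_s} \approx 1.95\ \text{ms}, \\
y_{\text{plot}} &= 1 + p, \qquad p \in [0, 1] \;\Rightarrow\; y_{\text{plot}} \in [1, 2].
\end{aligned}
```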

Related work: C. Loukas and P. Brown, ‘Online prediction of self-paced hand-movements from subthalamic activity using neural networks in Parkinson’s disease’, J Neurosci Methods, 137(2):193-205, 2004.
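The two processing stages described above (wavelets, then a neural network) can be roughly illustrated with an offline Python sketch: beta-band power is estimated by convolving the LFP with complex Morlet wavelets, and a single logistic unit turns the resulting feature into a probability. The wavelet parameters, the beta frequencies sampled, the weights and all function names are hypothetical. The published system used a trained artificial neural network running online, not this toy unit. The example sign convention assumes, as is commonly reported, that STN beta power decreases before movement, so a negative weight makes lower beta power yield a higher probability.

```python
import cmath
import math

def morlet_power(signal, fs, freq, n_cycles=7):
    """Power of signal around freq (Hz) over time, via convolution with a complex Morlet wavelet."""
    sigma_t = n_cycles / (2 * math.pi * freq)       # width (s) of the Gaussian envelope
    half = int(round(3 * sigma_t * fs))             # truncate the wavelet at +/- 3 sigma
    wavelet = [cmath.exp(2j * math.pi * freq * k / fs) * math.exp(-0.5 * (k / (sigma_t * fs)) ** 2)
               for k in range(-half, half + 1)]
    norm = sum(abs(w) for w in wavelet)             # so a unit-amplitude sinusoid gives power ~0.25
    power = []
    for n in range(len(signal)):
        acc = 0j
        for k, w in zip(range(-half, half + 1), wavelet):
            if 0 <= n - k < len(signal):
                acc += signal[n - k] * w
        power.append(abs(acc / norm) ** 2)
    return power

def beta_power(signal, fs, freqs=(15.0, 20.0, 25.0, 30.0)):
    """Mean Morlet power across a few beta-band frequencies (the frequency choice is illustrative)."""
    per_freq = [morlet_power(signal, fs, f) for f in freqs]
    return [sum(vals) / len(vals) for vals in zip(*per_freq)]

def movement_probability(features, weights, bias):
    """Single logistic unit standing in for the trained network: p = sigmoid(w . f + b)."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))
```

With `fs = 512` (the rate implied by 256 scans per 500 ms), a unit-amplitude 20 Hz sinusoid produces a power of about 0.25 at 20 Hz and almost none at 35 Hz.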

Microscopic Image Analysis

In my early days in research I developed image analysis algorithms based on established machine learning and pattern recognition techniques to assess crucial biological features that influence the outcome of radiotherapy (this was actually my PhD thesis!). The following figures show snapshots from an expert system designed to provide diagnostic support to clinical researchers regarding the morphology of a tumor in tissue section samples preprocessed with novel biological markers.

Related works: